An infamous 2016 article in Nature, reporting on a survey of 1500 scientists, begins with an alarming statement: "More than 70% of researchers have tried and failed to reproduce another scientist's experiments, and more than half have failed to reproduce their own experiments." A reader might immediately wonder why this is the case. Is it for nefarious reasons, because scientists work sloppily and falsify results? Or is there a much more mundane explanation: people attempting to reproduce these experiments' results lacked key pieces of information, details which could have contributed to a successful replication. With research software, this is often the case; something as seemingly minor as running an identical script on two different operating systems can generate completely different results.

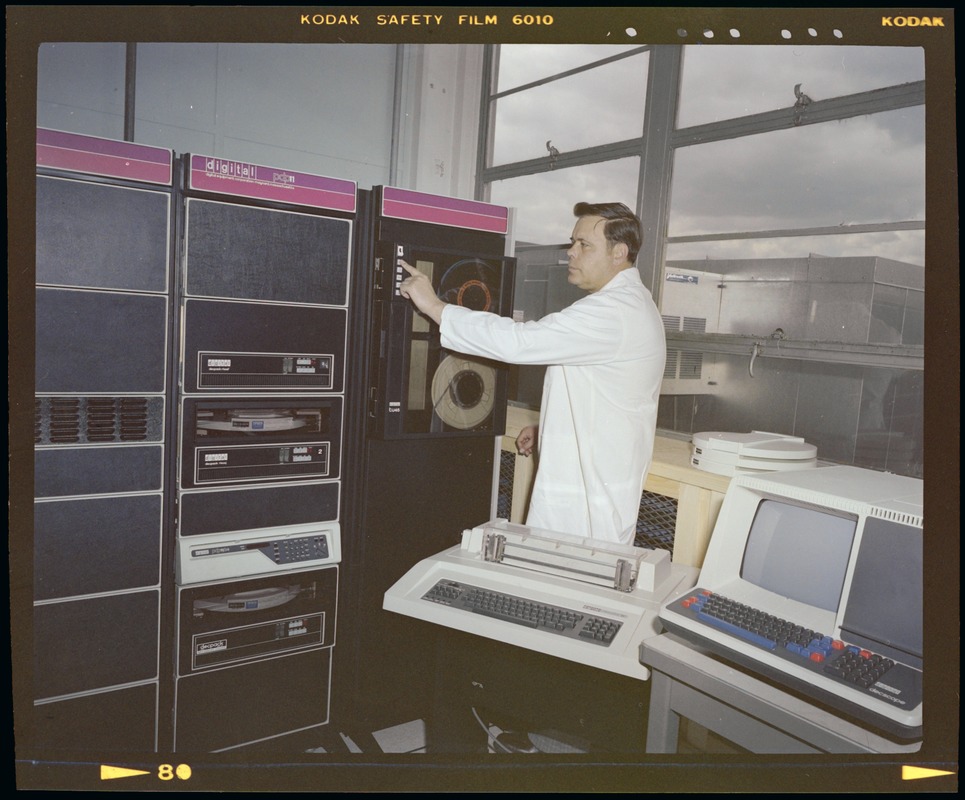

Image created by Scriberia for The Turing Way community and is used under a CC-BY licence. DOI 10.5281/zenodo.3332807.

Partially as a result of attention being drawn to the "replication crisis," many grants and journals have started to implement code sharing requirements alongside existing data sharing requirements. These requirements create challenges for scholars. While an academic paper in a text format might be readable forever, software is much trickier to share, preserve, and reuse. Losing the ability to run a piece of research software may lead to a range of problems, from a creator needing to redo work to restore the software to workable form to a paper being retracted because its conclusions can no longer be proven.This guide aims to answer questions and provide resources that will help you prepare your software so it's fit to share and publish.

If you are just beginning your project, please take a look at the Data Management Planning guide. Many of the ideas and tools that the guide discusses, such as good file organization, storage, and backup practices apply both to software and to data.

If you want to know more about open science and open access in general, please see the Open Access guide.

Here's what it boils down to: make sure your software will work on someone else's computer.

Note that "someone else's computer" might be a different location where you want to run your own software, such as the HPC (High Performance Computers).

Image CC BY. Retrieved from the Digital Public Library of America

Many of our recommendations in the following pages, particularly around documentation, will add time and effort. At the Libraries, we advocate strongly for open scholarship. We support students, staff, and faculty in publishing their data, code, and related documentation and materials in support of this belief, as well as to meet funder and journal mandates. We also acknowledge that open scholarship replicates some of the historical biases that exist in scholarship, academia, and the world more broadly, and are open to conversations about how we can work together with the NYU community to dismantle some of these barriers.

Ideals aside, here are some advantages that come with publishing reproducible code:

Let's say that again: you will benefit from making well-documented, reusable code. The less time you have to spend redoing or relearning what you did previously--or starting completely from scratch because you did not back up your work--the more you can focus on improving your work and building on your skills.

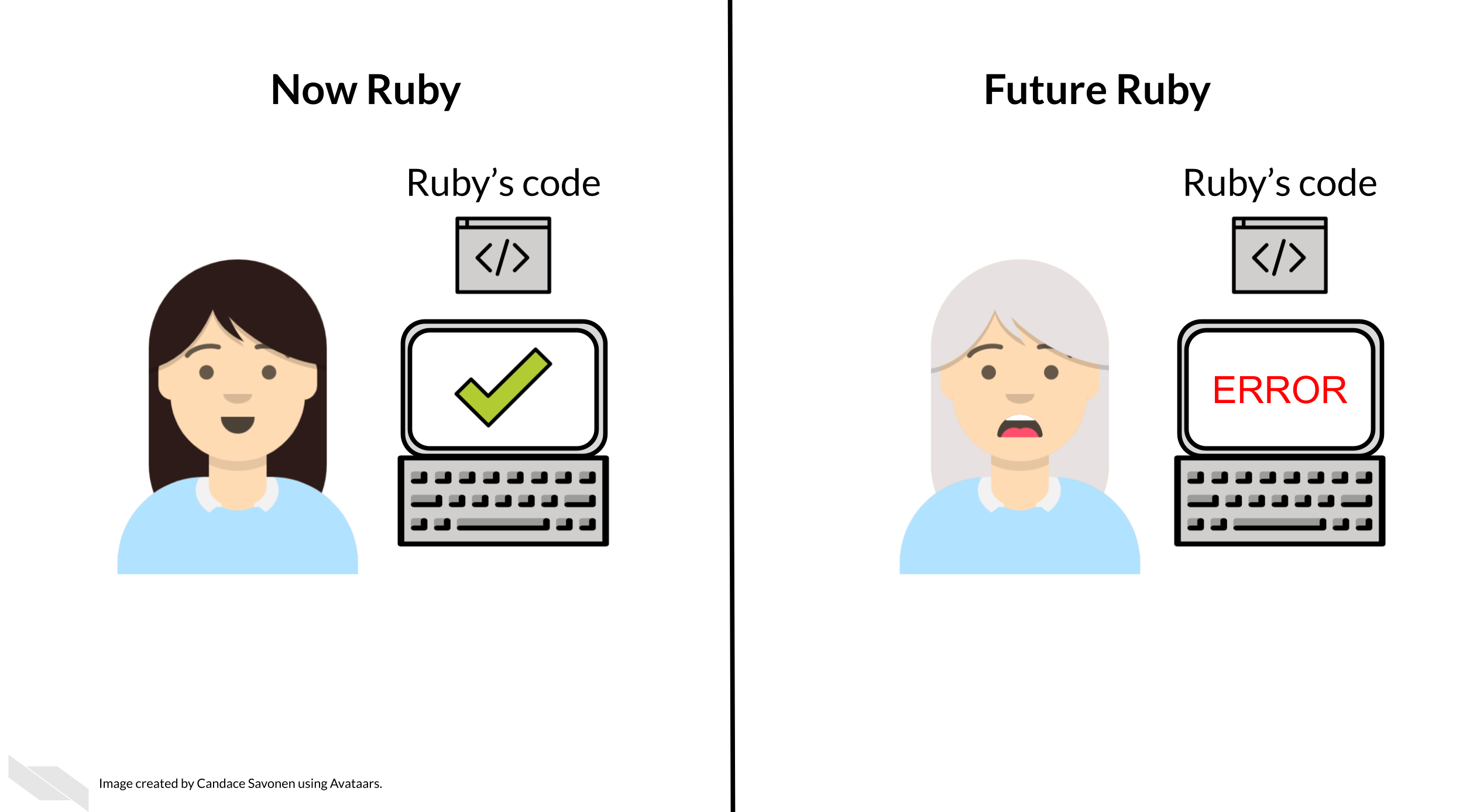

Image CC BY, retrieved from Reproducibility in Cancer Informatics.

There are a few reasons why we strongly advocate for open source software--we encourage you to apply open source licenses to your work, and to use open source products to the extent possible.